The Workshop: S3 Storage with MinIO - php[architect] Magazine January 2021

Joe • June 11, 2021

learning phparch writing php s3 data storage

Warning:

This post content may not be current, please double check the official documentation as needed.

This post may also be in an unedited form with grammatical or spelling mistakes, purchase the January 2021 issue from http://phparch.com for the professionally edited version.

The Workshop - S3 Storage with MinIO

This month we’re diving into running our own S3-compatible server using the open-source project MinIO. We’ll configure MinIO alongside our local development environment so we can easily replicate our application’s integration with S3 object storage without operating on “production” storage buckets or having to set up “dev” buckets.

Amazon’s Simple Storage Service (Amazon S3) is a widely used cloud storage product offered by Amazon Web Services (AWS). Amazon S3 offers secure, reliable, and scalable file storage which can be used to store web application data such as user-uploaded images or documents. You can easily start small and scale up storage options within S3 buckets, which are remote endpoints where file objects can be stored and retrieved. You can easily create buckets to group similar data or isolate data based on its access requirements. You can also use S3 buckets to serve static HTML websites.

While Amazon S3 is certainly the best-known S3 offering, it isn’t the only game in town. Cloud hosting provider DigitalOcean also provides S3-compatible access to its Spaces storage product. Google’s Cloud Storage supports S3 compatibility as well; Microsoft’s Azure Blob Storage, however, does not. Azure users do have the option of running an S3 proxy to translate between S3 and Azure Blob Storage.

As application developers, we often need to store user-generated files, which could be image avatars, documents such as spreadsheets or PDFs, or even audio or video recordings of meetings. Traditionally these files were saved on the web server the application was running on. Over time, it became much more common for these web servers to share storage via Network File System (NFS) or other network-accessible block storage. This type of storage was somewhat expensive because the data sits on spinning-platter hard drives. In addition to spinning-platter drives, it’s common to find services using solid-state drives (SSDs) for block storage, providing faster read and write times to keep latency between the physical disk and the application as low as possible. More recently, the container ecosystem has caused a big shift in the way our applications are run. One aspect of running your application in containers is that you don’t typically have a large disk readily available for reading or writing.

If this is your first time experimenting with S3, it’s important to realize S3 is object storage, not the block or file storage you may have more experience with. You’re already familiar with file storage: the filesystem on your computer’s hard drive acts as a filing cabinet in which you can store files. This type of storage requires an attached server to control and maintain the filesystem and everything written to or read from it. Think of it like a USB drive plugged directly into your computer, or a drive connected to another computer on your network and mapped to your local filesystem. Block storage is similar; however, files are broken down into “blocks,” each of which is given a unique identifier that allows the storage system to place it wherever it can be most conveniently accessed. When a file is read, the system looks up the locations of all the blocks belonging to the requested file, which are then put back together and “read” by the user or application. NFS, Samba, and similar systems, which connect disks to computers across a network and use access control so only authorized users can read and write, are examples of block and/or file storage.
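To make the block storage description more concrete, here is a minimal PHP sketch of the idea: a file is split into fixed-size blocks, each stored under its own identifier, and a lookup list is used to reassemble them. The function names writeBlocks and readBlocks, the identifier format, and the tiny block size are all hypothetical choices for illustration; real block storage systems work at a much lower level.

```php
<?php
declare(strict_types=1);

/**
 * Split a file's bytes into fixed-size blocks, each stored under a unique ID.
 * Returns [block store keyed by ID, ordered list of block IDs for the file].
 * Hypothetical helper for illustration only.
 */
function writeBlocks(string $contents, int $blockSize): array
{
    $store = [];
    $index = [];
    foreach (str_split($contents, $blockSize) as $i => $chunk) {
        // The position keeps IDs unique even when two chunks are identical.
        $id = sprintf('blk-%04d-%s', $i, substr(sha1($chunk), 0, 8));
        $store[$id] = $chunk;
        $index[] = $id;
    }

    return [$store, $index];
}

/** Look up every block listed in the index and put the file back together. */
function readBlocks(array $store, array $index): string
{
    $contents = '';
    foreach ($index as $id) {
        $contents .= $store[$id];
    }

    return $contents;
}

[$store, $index] = writeBlocks('php[architect] magazine', 8);
echo count($index), " blocks\n";        // prints "3 blocks"
echo readBlocks($store, $index), "\n";  // prints "php[architect] magazine"
```

The store here is just an array, so blocks could be placed in any order; only the index needs to remember the sequence.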

Object storage, or object-based storage, is a method of storing files in discrete, self-contained repositories that own the data they contain and can be spread across distributed file systems. S3 objects also feature rich metadata attributes that can store properties of a file for later reference. Commonly we find Content-Type and Content-Length (in bytes), which tell us the type and size of a file without having to download it. The storage system can then search and index metadata, allowing users to easily find the files they’re looking for. One major drawback of object storage is that objects cannot be modified in place. Objects can be overwritten, but each object must be written in its entirety. This is because objects are broken down into smaller pieces and spread across the system, which could be isolated to a specific datacenter or mirrored to object storage systems across the world, where users can access the files from whichever datacenter offers them the lowest latency.
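The two properties described above, metadata you can read without downloading the object and whole-object overwrites, can be sketched with a small in-memory stand-in. The class InMemoryObjectStore and its put, head, and get methods are hypothetical names chosen to echo common S3 operations; this is not MinIO’s or AWS’s API.

```php
<?php
declare(strict_types=1);

/** Hypothetical in-memory stand-in for an object store, for illustration only. */
final class InMemoryObjectStore
{
    /** @var array<string, array{body: string, metadata: array<string, string>}> */
    private array $objects = [];

    /** Write a whole object; any existing object at this key is replaced entirely. */
    public function put(string $key, string $body, string $contentType): void
    {
        $this->objects[$key] = [
            'body' => $body,
            'metadata' => [
                'Content-Type' => $contentType,
                'Content-Length' => (string) strlen($body),
            ],
        ];
    }

    /** Return only the metadata, without handing back the object body. */
    public function head(string $key): ?array
    {
        return $this->objects[$key]['metadata'] ?? null;
    }

    /** Return the full object body, or null when the key does not exist. */
    public function get(string $key): ?string
    {
        return $this->objects[$key]['body'] ?? null;
    }
}

$objectStore = new InMemoryObjectStore();
$objectStore->put('magazine/2020-12.pdf', '%PDF-1.4 draft', 'application/pdf');
// There is no "append" or partial update: a second put replaces the object.
$objectStore->put('magazine/2020-12.pdf', '%PDF-1.4 final edition', 'application/pdf');

$meta = $objectStore->head('magazine/2020-12.pdf');
echo $meta['Content-Type'], ' ', $meta['Content-Length'], " bytes\n";
```

Note that the store offers no way to change part of an object; the only way to “edit” one is to put a complete replacement.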

Getting started with MinIO

MinIO is an open-source project backed by a company offering enterprise services that complement the open-source offering, such as long-term support, security reviews, guided updates for new releases, and commercial licenses. To get started we’re going to explore the free Community version, which is licensed under the GNU AGPL v3.

Note: We’re using MinIO locally in a controlled environment so I’m not using SSL/TLS in these examples. If you do need to use MinIO across public networks you can learn more about running TLS with MinIO.

MinIO is made up of two parts, a client and a server; we’ll install both on my Ubuntu 20.04 LTS development system. These are the generic Linux instructions, which should work on most modern distributions. When we run MinIO, we pass in the access key and secret key values we’ll use to authenticate. If you’re doing this in a production environment, please ensure you’re protecting these secrets! We also pass the path we’ll use as the root of our object store, “/data” in our examples.

$ wget https://dl.min.io/server/minio/release/linux-amd64/minio

$ chmod +x minio

$ sudo mv minio /usr/local/bin/.

$ minio --version

minio version RELEASE.2020-12-18T03-27-42Z

$ wget https://dl.min.io/client/mc/release/linux-amd64/mc

$ chmod +x mc

$ sudo mv mc /usr/local/bin/.

$ mc --version

mc version RELEASE.2020-12-18T10-53-53Z

$ MINIO_ACCESS_KEY=phparch MINIO_SECRET_KEY=s3storage minio server /data

macOS users can install MinIO with Homebrew:

$ brew install minio/stable/minio

$ minio --version

minio version RELEASE.2020-12-18T03-27-42Z

$ brew install minio/stable/mc

$ mc --version

mc version RELEASE.2020-12-18T10-53-53Z

$ MINIO_ACCESS_KEY=phparch MINIO_SECRET_KEY=s3storage minio server /data

If you’re not ready to install the binaries you can easily run MinIO from Docker with the following command:

$ docker run -p 9000:9000 \
    -e "MINIO_ACCESS_KEY=phparch" \
    -e "MINIO_SECRET_KEY=s3storage" \
    minio/minio server /data

Attempting encryption of all config, IAM users and policies on MinIO backend

Endpoint: http://172.17.0.2:9000 http://127.0.0.1:9000

Browser Access:

http://172.17.0.2:9000 http://127.0.0.1:9000

Object API (Amazon S3 compatible):

Go: https://docs.min.io/docs/golang-client-quickstart-guide

Java: https://docs.min.io/docs/java-client-quickstart-guide

Python: https://docs.min.io/docs/python-client-quickstart-guide

JavaScript: https://docs.min.io/docs/javascript-client-quickstart-guide

.NET: https://docs.min.io/docs/dotnet-client-quickstart-guide

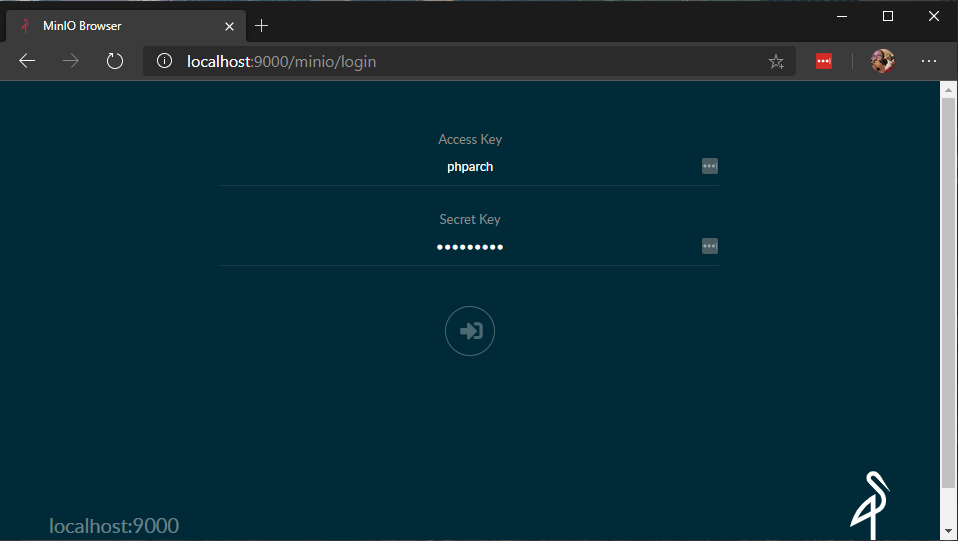

With this docker command, we’ve spun up a containerized MinIO server and set the access key and secret key. We can now navigate to the Browser Access URL. In my case, Docker is running inside Windows Subsystem for Linux (WSL), which is why we see http://172.17.0.2:9000 listed: 172.17.0.2 is the container’s IP address. Because the command publishes port 9000, I’m able to access the MinIO server via my browser at http://localhost:9000. Using our keys from the docker run command we can log in:

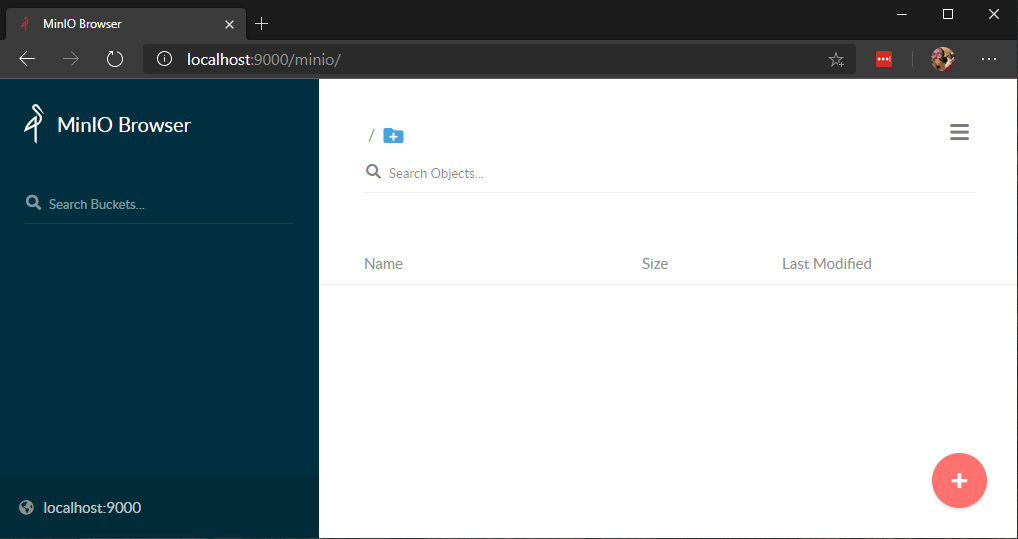

Once logged in we can see we have no objects yet.

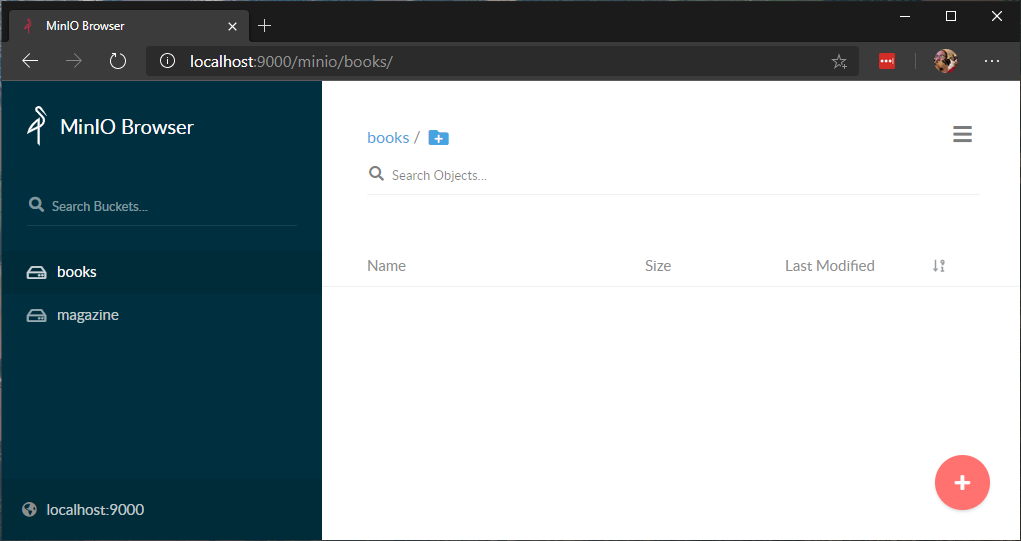

We’ll go ahead and create two buckets “books” and “magazine” so we can start working with files on our S3 server:

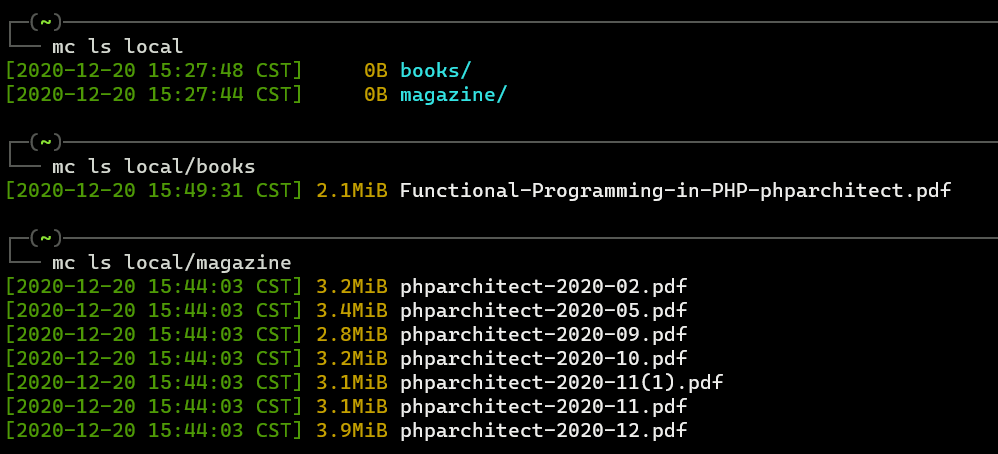

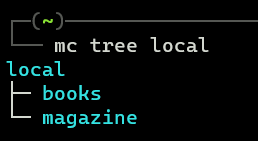

Back in our terminal, we can use the MinIO client to set up an alias so we can run commands on the console without repeating our access secrets each time. We’ll create an alias called “local” and point it at our running MinIO server. Then we’ll run mc ls local, which tells the client to run the ls command against the local alias:

$ mc alias set local http://localhost:9000 phparch s3storage

$ mc ls local

[2020-12-20 15:27:48 CST] 0B books/

[2020-12-20 15:27:44 CST] 0B magazine/

In my local ~/Downloads folder I have PDFs of phparch.com magazines and a book:

$ ls -alh | grep pdf

-rwxr-xr-x 1 halo halo 2.2M Dec 20 15:39 Functional-Programming-in-PHP-phparchitect.pdf*

-rwxr-xr-x 1 halo halo 3.3M Dec 20 15:39 phparchitect-2020-02.pdf*

-rwxr-xr-x 1 halo halo 3.5M Dec 20 15:39 phparchitect-2020-05.pdf*

-rwxr-xr-x 1 halo halo 2.9M Dec 20 15:39 phparchitect-2020-09.pdf*

-rwxr-xr-x 1 halo halo 3.2M Dec 20 15:39 phparchitect-2020-10.pdf*

-rwxr-xr-x 1 halo halo 3.2M Dec 20 15:39 phparchitect-2020-11(1).pdf*

-rwxr-xr-x 1 halo halo 3.2M Dec 20 15:39 phparchitect-2020-11.pdf*

-rwxr-xr-x 1 halo halo 3.9M Dec 20 15:39 phparchitect-2020-12.pdf*

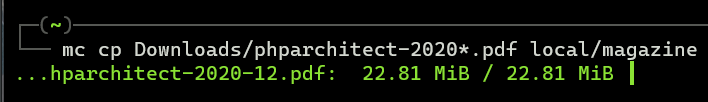

We can copy files into our magazine bucket by using:

mc cp Downloads/phparchitect-2020*.pdf local/magazine

Note that it supports the use of wildcards.

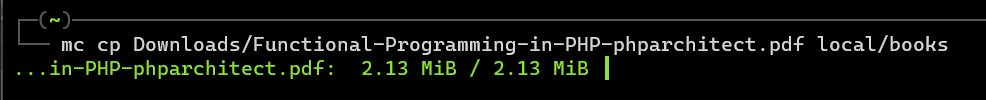

We’ll use the same syntax but with the books bucket instead of magazine with the command:

mc cp Downloads/Functional-Programming-in-PHP-phparchitect.pdf local/books

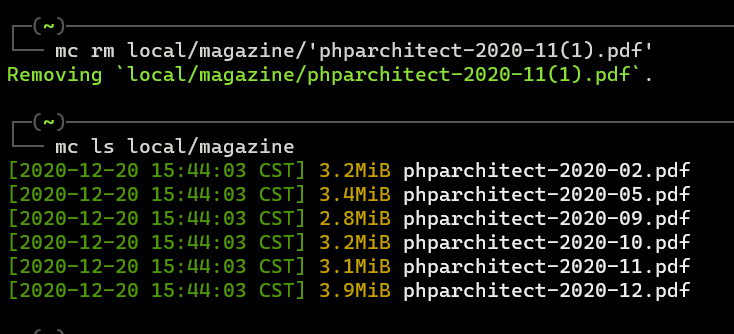

We can now use mc ls to list the objects in our buckets and confirm our PDFs have been stored behind the local alias, which points to my MinIO server running in a Docker container.

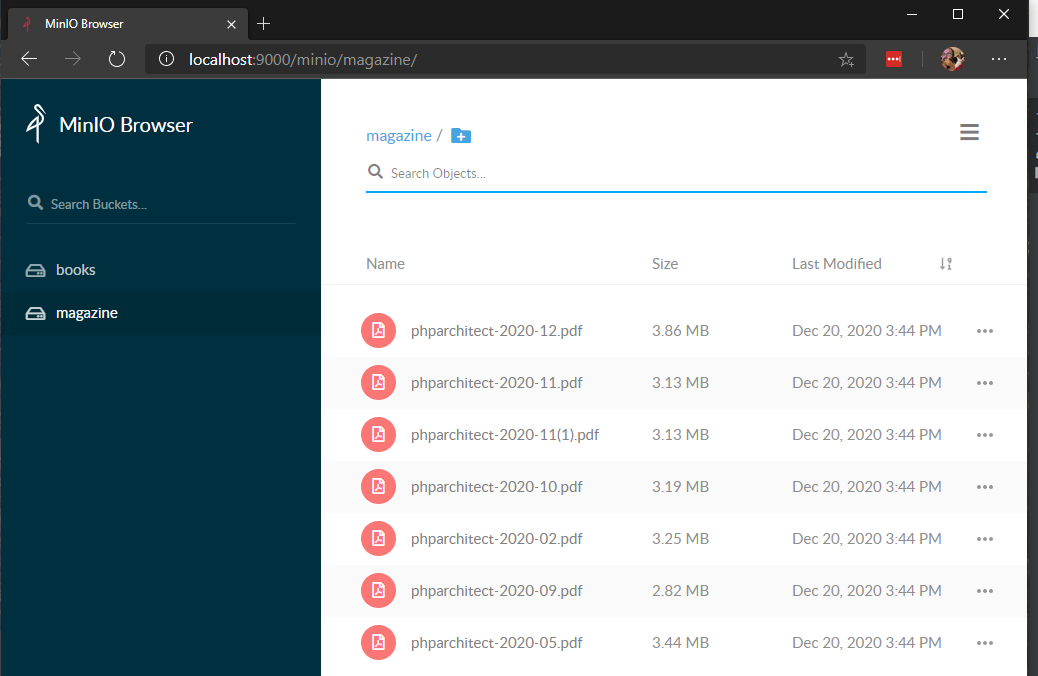

Refreshing our MinIO server in our web browser we can see our magazine files in the magazine bucket:

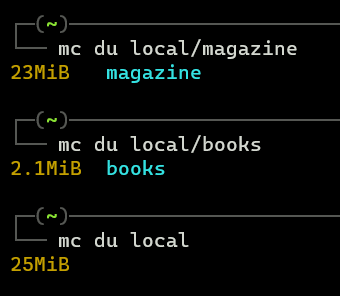

MinIO allows us to create shareable links that can be set to expire up to 7 days in the future, which is helpful for creating short-lived download links to files as needed. We can monitor disk usage with the MinIO client’s du subcommand (for example, mc du local/magazine).

We can also use the tree subcommand (for example, mc tree local) to get a tree view of our buckets and see the directory structure.

You might have noticed we have an extra copy of phparchitect-2020-11(1).pdf in our magazine bucket. This is because I downloaded the issue twice. We can remove this duplicate file by running the following command:

mc rm local/magazine/'phparchitect-2020-11(1).pdf'

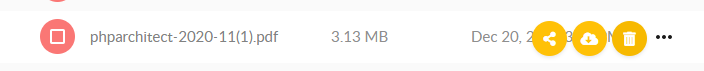

In the MinIO browser, you can instead click the ellipsis on the item you want to delete and then click the trash can icon.

Connecting MinIO to our Application

Now that we have our MinIO server running locally, we need to connect it to the other parts of our local development environment so our applications and other services can access our S3 storage server. To access S3 storage from our application we’ll use thephpleague/flysystem, a fantastic PHP package that provides one interface for dealing with many different types of filesystems, including S3. Frank de Jonge is the author of Flysystem, and many frameworks use it as their foundation for filesystem access. Laravel includes Flysystem and adds some syntactic sugar that makes it even easier to work with S3 buckets in our application, including using the AWS SDK’s streams, as well as with drives connected via FTP/SFTP.

I have created a brand new Laravel 8 application with:

composer create-project laravel/laravel local-s3

We’ll add our MinIO S3 connection information to config/filesystems.php. The default S3 configuration in this file reads the AWS environment variables from our .env file. We can reuse those variables and fill in our MinIO values:

.env:

AWS_ACCESS_KEY_ID=phparch

AWS_SECRET_ACCESS_KEY=s3storage

AWS_DEFAULT_REGION=us-east-1

AWS_BUCKET=magazine

AWS_ENDPOINT=http://127.0.0.1:9000

AWS_URL=

config/filesystems.php:

's3' => [
    'driver' => 's3',
    'key' => env('AWS_ACCESS_KEY_ID'),
    'secret' => env('AWS_SECRET_ACCESS_KEY'),
    'region' => env('AWS_DEFAULT_REGION'),
    'bucket' => env('AWS_BUCKET'),
    'url' => env('AWS_URL'),
    'endpoint' => env('AWS_ENDPOINT'),
],

Note: To use the S3 driver in Laravel, ensure you add "league/flysystem-aws-s3-v3": "^1.0" to your composer.json and run composer update. This package is not included with Laravel by default.

We can use Laravel’s Storage facade to interact with our S3 bucket. Based on our configuration, we’ve automatically connected to the magazine bucket. This is not very flexible, because each S3 connection in our application is tied to a single bucket. Since we already have two buckets, we can refactor our config/filesystems.php to use named connections, s3-magazine and s3-books, pointed at our two existing buckets. This assumes .env also defines AWS_MAGAZINE_BUCKET=magazine and AWS_BOOK_BUCKET=books:

's3-magazine' => [
    'driver' => 's3',
    'key' => env('AWS_ACCESS_KEY_ID'),
    'secret' => env('AWS_SECRET_ACCESS_KEY'),
    'region' => env('AWS_DEFAULT_REGION'),
    'bucket' => env('AWS_MAGAZINE_BUCKET'),
    'url' => env('AWS_URL'),
    'endpoint' => env('AWS_ENDPOINT'),
],

's3-books' => [
    'driver' => 's3',
    'key' => env('AWS_ACCESS_KEY_ID'),
    'secret' => env('AWS_SECRET_ACCESS_KEY'),
    'region' => env('AWS_DEFAULT_REGION'),
    'bucket' => env('AWS_BOOK_BUCKET'),
    'url' => env('AWS_URL'),
    'endpoint' => env('AWS_ENDPOINT'),
],
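The two disk definitions differ only in their bucket name, so one option is to generate them from a small helper. The sketch below is plain PHP that runs outside Laravel: the function s3Disk is hypothetical, and the $env array stands in for Laravel’s env() lookups, which you would use instead inside config/filesystems.php.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: build one S3 disk definition for the given bucket.
 * $env is a plain array standing in for Laravel's env() helper.
 */
function s3Disk(string $bucket, array $env): array
{
    return [
        'driver' => 's3',
        'key' => $env['AWS_ACCESS_KEY_ID'] ?? null,
        'secret' => $env['AWS_SECRET_ACCESS_KEY'] ?? null,
        'region' => $env['AWS_DEFAULT_REGION'] ?? 'us-east-1',
        'bucket' => $bucket,
        'url' => $env['AWS_URL'] ?? null,
        'endpoint' => $env['AWS_ENDPOINT'] ?? null,
    ];
}

// Values copied from the .env example in this article.
$env = [
    'AWS_ACCESS_KEY_ID' => 'phparch',
    'AWS_SECRET_ACCESS_KEY' => 's3storage',
    'AWS_DEFAULT_REGION' => 'us-east-1',
    'AWS_ENDPOINT' => 'http://127.0.0.1:9000',
];

// Map each bucket to the disk name the application will use.
$disks = [];
foreach (['magazine' => 's3-magazine', 'books' => 's3-books'] as $bucket => $diskName) {
    $disks[$diskName] = s3Disk($bucket, $env);
}

echo implode(', ', array_keys($disks)), "\n"; // prints "s3-magazine, s3-books"
```

Some MinIO setups also set the AWS SDK’s use_path_style_endpoint option to true on each disk; whether yours needs it is worth checking against the Laravel and AWS SDK documentation.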

Whenever we want to interact with one of these buckets, we can tell Laravel’s Storage facade which one we want to use. We can list the files in the magazine bucket from inside our Laravel application via:

>>> $files = Storage::disk('s3-magazine')->allFiles();
=> [
     "phparchitect-2020-02.pdf",
     "phparchitect-2020-05.pdf",
     "phparchitect-2020-09.pdf",
     "phparchitect-2020-10.pdf",
     "phparchitect-2020-11.pdf",
     "phparchitect-2020-12.pdf",
   ]
>>> $files = Storage::disk('s3-books')->allFiles();
=> [
     "Functional-Programming-in-PHP-phparchitect.pdf",
   ]
>>>

So far, our use case focuses on storing php[architect]’s books and magazines in a protected S3 bucket. The part of the application responsible for allowing users to download books and magazines could also use the same Storage facade. It would download a file from S3 and generate a response to the user with their requested file download directly to their browser:

// Authenticate and authorize the user's request

$headers = [];

return Storage::disk('s3-magazine')->download('phparchitect-2020-12.pdf', 'phparch-magazine-2020-12.pdf', $headers);

This creates a response with the supplied $headers and downloads the file from the magazine S3 bucket on our MinIO server to the user’s system, where it is saved as “phparch-magazine-2020-12.pdf”.

Connecting MinIO to Other Parts of Your Development Environment

Because I’m using Docker in WSL, my endpoint uses 127.0.0.1, which is “localhost”. Container networking can make it confusing to work out how to connect services together. Since Docker publishes our MinIO server on port 9000, any service running directly on our local system should be able to connect to localhost on port 9000 to interact with MinIO. Other containers typically cannot use localhost for this, since inside a container localhost refers to the container itself; they need the host’s address or a shared Docker network. If you’re running your application on the Docker host as I am, you should most likely also use localhost, as in these examples.
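When a connection fails, it helps to confirm the endpoint is reachable before debugging the application itself. The sketch below probes MinIO’s liveness endpoint, /minio/health/live, from plain PHP. The function names minioHealthUrl and minioIsReachable are hypothetical, and the code assumes allow_url_fopen is enabled, which is PHP’s default.

```php
<?php
declare(strict_types=1);

/** Build the liveness-check URL for a MinIO endpoint (hypothetical helper). */
function minioHealthUrl(string $endpoint): string
{
    return rtrim($endpoint, '/') . '/minio/health/live';
}

/** Return true when the endpoint answers the liveness check with HTTP 200. */
function minioIsReachable(string $endpoint, float $timeoutSeconds = 2.0): bool
{
    $context = stream_context_create([
        'http' => [
            'method' => 'GET',
            'timeout' => $timeoutSeconds,
            'ignore_errors' => true, // still read the status line on 4xx/5xx
        ],
    ]);

    // Suppress the warning PHP raises when the connection is refused.
    $body = @file_get_contents(minioHealthUrl($endpoint), false, $context);
    if ($body === false) {
        return false;
    }

    // The HTTP stream wrapper fills $http_response_header in this scope.
    $statusLine = $http_response_header[0] ?? '';

    return preg_match('#^HTTP/\S+\s+200\b#', $statusLine) === 1;
}

$endpoint = 'http://127.0.0.1:9000';
echo minioHealthUrl($endpoint), "\n"; // prints "http://127.0.0.1:9000/minio/health/live"
echo minioIsReachable($endpoint) ? "MinIO is reachable\n" : "MinIO is not reachable\n";
```

Running this from the same place your application runs (host, container, or VM) shows whether the endpoint in your .env file is the right one for that environment.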

If you are using Vagrant to host your PHP application, I recommend using MinIO inside the virtual machine to simplify the connections. Within a contained VM the host should be “localhost”. You can easily test drive MinIO in Homestead’s Vagrant environment.

I hope this has demystified some of the complexity of working with S3 storage with your local applications. Remember the cloud is just someone else’s computer, and S3 is just someone else’s hard drive!

